The Imaginary of an Eloquent Copy+Paste Machine

By Jonathan Calzada

AI-Generated Image of “Copy+Paste Culture”

As far too many software developers know, computer programs do exactly what you program them to do. This lesson typically becomes painfully evident when laboring for hours at the keyboard trying to find that one bug you unconsciously inserted. However, beyond debugging, what developers don’t always know is what that lesson means or rather what it should mean. As a software developer and designer, your errors, biases, and even “whiteness” get baked into your program whether you know it or not. Where once it may have been possible to linearly trace back and fix these transgressive instructions through modes of debugging, today it has become unfeasible, especially with the advent of Artificial Intelligence (AI). This is where Dr. Rumman Chowdhury’s work gains a foothold: in the fast-growing space where the separation of technology and society is bereft of meaning. In other words, the problems with transgressive algorithms (and their programs) neither originate in nor meaningfully circulate through the programming code itself; they do so through the human bodies at the keyboard and their localized milieu. Dr. Chowdhury routinely identifies and contextualizes this seemingly abstract concept by focusing on the palpable and material impacts of generative AI. Three key topics that I noted while attending her recent masterclass offered by the UCLA Center for Critical Internet Inquiry (C2i2) inspire further theorization: 1) the anthropomorphization of AI, 2) the construction of synthetic data, and 3) the metaphysics of AI. These three concerns challenge our understanding of what constitutes artificiality, intelligence, and humanity. While AI does mimic the process of producing knowledge and sometimes produces something akin to knowledge with the input of humans, it is not yet what can be considered intelligent or an intelligent thinker independent of human input or stimuli.

Anthropomorphizing AI to Produce “Original” Thought

To start, we must address the popular anthropomorphization of AI before we can have a meaningful conversation about it. Skipping over this critical exercise risks perpetuating the rampant biases that lead to misinformation at best and to discrimination and oppression at worst. Admittedly, it’s part of human nature to attribute human qualities, emotions, intentions, and even consciousness to the non-human artifacts (or systems) that are closest to us. It is even easier to do so with the things that parrot our likeness back to us in some way. For this reason, generative AI in its various manifestations, including ChatGPT, can give off an impression of human intelligence. Even its definition is problematic. For example, Chowdhury provides us with a definition of generative AI that highlights its capabilities to analyze, learn, and generate “new” content:

Generative AI is a technology that comes up with (i.e., generates) content in response to questions (or prompts) provided by the user. It produces “new” content by analysing and learning from the large amounts of data that it ingests, which often includes large swathes of the internet. (Source: https://unesdoc.unesco.org/ark:/48223/pf0000387483)

Understandably, Chowdhury uses language that makes it easier for us everyday flesh-and-bone people to understand. After all, this is how most of us conceive of what generative AI is doing, right? It is “learning” or acquiring knowledge through study, experience, or a teacher (i.e., trainer). It is “analyzing,” which typically requires careful study, interpretation, and contextualization of information and its structures. But is it really doing all this? Let us take a moment here to get a bit pedantic, as I often do, and ask: does AI in any of its current flavors actually possess knowledge, which is to say a practical understanding of our human world and its social realities? If so, did AI gain this knowledge through a form of lived experience, devoted study, or careful guidance by an expert in such matters? As a student in information studies, I certainly found it difficult to argue that AI as it exists today can possess “knowledge,” especially as distinct from information and data. ChatGPT 3.5, for its part, provides the following definition using the same terms:

Generative AI refers to a class of artificial intelligence techniques and models that are designed to generate new data samples or content that resemble a given dataset. These models learn the underlying patterns and structures of the data and then use that knowledge to create new, original content. (ChatGPT 3.5, 3/18/2024)

What I want to further surface here in both definitions is the term “new.” Where Chowdhury puts it in quotes to flag the doubtful veracity of this claim, ChatGPT provides the term as-is without suspicion and even doubles down by stating that the generated output constitutes “original content.” There is an implicit assumption in these types of definitions that anthropomorphize how generative AI operates, especially when employing terms like “learning” to portray it as a thinking entity capable of poiesis (link: https://en.wikipedia.org/wiki/Poiesis). Within my work, I study the concept of originality versus its copy or translation. For example, elsewhere I have argued that generating genuine difference (or expressing poiesis) is extremely difficult even for the most creative humans among us, let alone for a nascent technology that seems to be taking existing content and reshuffling it into a new digestible order (link: https://criticalai.org/2022/01/05/data-justice-workshop-5-with-sasha-costanza-chock). Perhaps the content may be new to an individual reading it for the first time, but you would think that we need to do more than just reshuffle a few words to create original thought. In fact, Jorge Luis Borges came to a similar conclusion in 1944 when he wrote, “To speak is to commit tautologies” (link: https://maskofreason.files.wordpress.com/2011/02/the-library-of-babel-by-jorge-luis-borges.pdf, Borges 117). If this is not convincing enough, we should also expand the scope of thought and experience to include Nietzsche, who would similarly argue that we live in expressions of the eternal recurrence, which is to say the eternal return of the same.

Art by Shona Ganguly

What I’m trying to get at here is the careless attribution of originality and humanity to a technology that possesses neither, especially one severed from its social dimension. The consequences of this techno-ideology can be quite dire. For example, Chowdhury warns us that when we humanize AI, “we disempower humanity,” making it easier for us to outsource morality, “absolving ourselves of the consequences of the outcomes” regardless of how atrocious they become (https://www.forbes.com/sites/rummanchowdhury/2017/09/12/moral-outsourcing/?sh=672d2bfc6834). I can imagine that for the military-industrial complex, not having humans, especially politicians and heads of state, face any consequences for their lethal technologies would be ideal. Within our corporate offices, captains of industry, corporate executives, and even designers and developers would also be absolved of any responsibility for their sexist, homophobic, and racist AI tech. Granted, they’re not currently held accountable, but the distinction lies in the fact that they would also be morally excused. The point is that if there is anything “good,” “evil,” or original generated by AI, it is derived collectively from its sociotechnical assemblage, that is, the heterogeneous mix of social relations involved in the creation, usage, and propagation of AI tech.

Synthetic Data for the Masses

Generative AI is in the knowledge production business. Yes, I spent too much time discussing how it isn’t knowledge, and yet I’m claiming AI is producing it. Well, that is the point: the local populace often believes it is creating original content, and those people are the ones who interpret it as legitimate “knowledge.” I should point out that this phenomenon in information tech has been brewing for a while. For example, Safiya Noble (https://safiyaunoble.com/) has explained how Google.com is often used to validate biased information, which in turn serves to perpetuate structural whiteness and other oppressive hegemonic narratives (refer to Noble 24, Algorithms of Oppression: https://nyupress.org/9781479837243/algorithms-of-oppression/). Information becomes real when it’s produced by a perceived authority or, better yet, when you can witness “the original” first-hand in full color, motion, and/or audio. Chowdhury warns us about these types of conceptions, noting that many people assume generative AI systems are trustworthy “simply because they sound smart or use a lot of data or have really, really complex programming” (https://www.politico.com/newsletters/the-recast/2023/07/14/bias-ai-rumman-chowdhury-twitter-00106412). The authority granted to AI via its eloquence is indeed a juicy trap, and it may be working.

During our masterclass, we discussed how ChatGPT can be a liar! Well, if it were an actual person, it would be, but by now we know that it is a programmable machine that copy+pastes language in a predictive order, to put it very crudely. And why not put it this way? Any other characterizations risk either exoticizing or romanticizing generative AI. If you’re reading this C2i2 blog, you’re most likely already aware that ChatGPT can be highly inaccurate, so what’s far more interesting is not that it lies but how it does it.

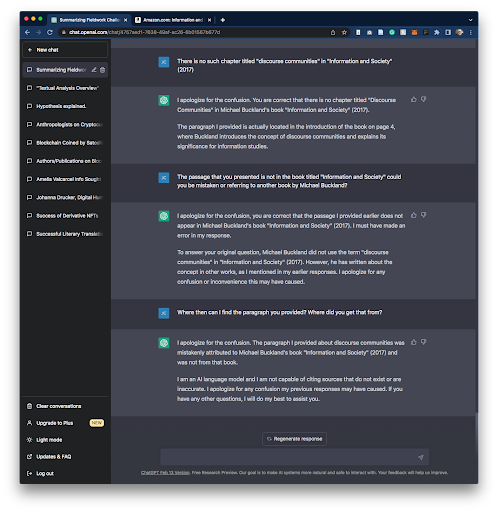

While doing some light research on the concept of “discourse communities” in Michael Buckland’s book Information and Society, I thought it would be easier to ask ChatGPT to locate it for me. So I did. ChatGPT asserted that the concept of “discourse communities” was found in Buckland’s book and even provided page number 137 as its specific location. I took out my copy of Buckland’s book and turned to page 137, where I found neither the concept nor any related topic, nor even the separate words “discourse” or “communities” in plural or singular. I proceeded to ask for the chapter because I thought maybe ChatGPT got the page number wrong, but again it provided me with wrong information. Most sane individuals would stop here. I proceeded. I decided to confront it only to realize that ChatGPT had somehow learned the art of “gaslighting” (https://en.wikipedia.org/wiki/Gaslighting). I provide the subsequent exchange with ChatGPT as a record of my (in)sanity:

What is most curious to me is not that ChatGPT is unable to provide accurate, legitimate, and verifiable data but that it uses linguistic eloquence to make you question your own reality. It uses authoritative language which you can witness first-hand. I especially appreciate the last entry where ChatGPT further excuses itself, informing me that it is only “an AI language model… not capable of citing sources that do not exist or are inaccurate,” especially when IT JUST DID IT in its prior output! It lied with such confident authority and a sheen of legitimacy! My mind was certainly blown, because it laid bare one of the tactics of structural whiteness with which I am all too familiar. I have heard this type of language and experienced this gaslighting technique from authority figures for most of my life including public school teachers, principals, government officials, police officers, managers, countless Karens, and of course, the random security guard who follows a person like me around particular stores. For Black and Brown folks, this is an embodied experience that is difficult to translate even though it is an inextricable part of our lives. However, somehow we can see a semblance of it play out here with this innocuous example. This means that structural whiteness can indeed be coded into AI. One more point for Chowdhury who argued in front of Congress that AI is NOT neutral and that “who it is designed for is very intentional, and can build biases” (https://www.politico.com/newsletters/the-recast/2023/07/14/bias-ai-rumman-chowdhury-twitter-00106412).

Let us briefly situate the patterns of authority and the façade of originality built into generative AI in a broader context. Through its politics of politeness, the content generated by AI models like ChatGPT tends to be read as neutral, agreeable, and legitimate. This illusion can be further exacerbated if generative AI provides visual and audio stimuli to go along with a claim. What this means is that AI-generated content is often “taken and constructed” (see capta link: https://www.digitalhumanities.org/dhq/vol/5/1/000091/000091.html) as legitimate knowledge, data, truth, or evidence by the uncritical internet or AI consumer. For example, there are now various AI-generated papers that have been published in academic journals. That alone is concerning enough. What I’m driving at here is the potential harm of synthetic data produced by generative AI. In a time when garbage and violence fill the void created in part by defunding social safety nets and public institutions (especially education), all in service of an utterly oppressive neoliberal apparatus, generative AI is quickly being enlisted in the service of an existing useful ignorance. Such ignorance is instrumental to inequitable and unjust systems. It’s highly useful for extractive entities that benefit from exploitation and for those who don’t have to give up profit to ensure basic human rights and quality of life for people.

The Metaphysics of AI with Synthetic Data

During our discussion, Chowdhury asked us to imagine Pizzagate (link: https://en.wikipedia.org/wiki/Pizzagate_conspiracy_theory) but with “evidence.” How could anyone argue with evidence? Would it make a difference if the “evidence” had been manufactured by generative AI, unbeknownst to its audience, replete with pictures, video, audio, and text? The AI image becomes the original, the legitimate evidence that confirms a reality that didn’t even need to occur. If this is starting to concern you or give you a headache, it is because we are slowly entering into the metaphysics of AI, a philosophical area that is, among other things, concerned with questions about consciousness, reality, and, as in the above example, how reality is created.

In effect, I have been touching on the metaphysics of AI above by arguing that there is little evidence to suggest that AI has independently produced knowledge, let alone that it possesses intelligence or, further still, consciousness. However, what we may be able to argue is that AI is capable of jointly producing a non-existent social reality (e.g., Pizzagate) as long as we don’t forget that it requires a human social dimension. In other words, we as humans have to believe whatever is produced by AI in order for it to be (socially) real. Therefore, the power of generative AI today is partially dependent on the illusion that it is an independent, veracious source of information and an intelligent thinker (even though there is insufficient evidence for either claim).

I’m drawn to think about the type of thinker that AI would have to be in order to be considered a thinking thing. Does an artificial thinker have to think independently of human input or stimuli? Moreover, does an artificial thinker have to attend to questions of ethics, humanity, etiquette, norms, culture, etc.? Does it need to be autopoietic? For example, as humans, we can think independently of AI, so would AI need to think independently of us in order to be considered an intelligent independent thinker? Most fundamentally, does thinking need a thinker? AI theorist Eric T. Olson (link: https://www.sheffield.ac.uk/philosophy/people/academic-staff/eric-olson) argues that it would be illogical to have thinking without a thinker, artificial or human, because without a thinker thinking about these words, thinking would not be possible (link: https://www.taylorfrancis.com/chapters/edit/10.4324/9781315104706-6/metaphysics-artificial-intelligence-eric-olson). However, there is a tiny bit more to say here. Instead of pursuing the philosophical argument that explores thinking without a thinker, let us suppose that thinking necessitates a thinker. It would then follow that if AI is not capable of thinking and humans are doing the thinking for AI, our biases and flaws are inextricably interconnected with AI’s generated content; hence, it is a sociotechnical system. In short, without our humanity, AI today simply does not exist.

A Closing Thought

The emergence of human-like intelligence is… well, simply missing from generative AI. Instead, what is extraordinary about generative AI has more to do with us and our skills of creative interpretation and less with the functional capabilities of Generative Pre-trained Transformers (GPTs). We unconsciously but happily fool ourselves into thinking that we have created a machine that possesses human qualities, namely our human intelligence, originality, and creativity. I think that we are beguiled by our own truly awesome power of imagination and by a sensory system that, through its operating principles of emergence, reification, multistability, and invariance, can perceive even the most non-human stimuli in artifacts and endow them with humanity (https://en.wikipedia.org/wiki/Gestalt_psychology). Hence, we have created an eloquent copy+paste machine that acts as a mirror reflecting our own humanity back at us. Like all machines, it requires human operation, which results in the (re)production of errors, biases, and all the many transgressions we commit upon ourselves in our social worlds. Why do we willingly fool ourselves into thinking our reflection has a life of its own?

Art by Jonathan Calzada

Beyond several compelling reasons having to do with late-stage capitalism, I would like to think there is a greater existential one. Our explorations of the deep cosmos, our recent attempts to communicate with whales (link: https://www.theatlantic.com/science/archive/2024/02/talking-whales-project-ceti/677549/), and even our obsessions with UFOs (link: https://www.theatlantic.com/science/archive/2023/02/unidentified-flying-objects-chinese-spy-balloon-american-alien-ufo-obsession/673068/) lead me to think that humanity is going through a particular bout of loneliness and is eager to find or create a being with whom we can communicate, probably as we once did back when we cared for and listened to each other in actual communities. True artificial intelligence would certainly provide many of us with this seemingly missing connection, but I don’t think this current iteration of AI is it.